Let's talk AI

Expertise

Custom development: Mobile applications, Tailor‑made business software, E‑commerce websites, SaaS applications

Data & AI: Generative AI, Computer Vision, Predictive systems, Data Engineering

Product strategy: Product discovery, UX Research, UX/UI Design

Consulting & Transformation: Cloud & DevOps, Digital transformation

Technical expertise

React / React Native, Node.js / TypeScript, Angular, Python, PHP, Flutter, .NET, Native mobile development

Team MethodCustom development Mobile applications Tailor‑made business software E‑commerce websites SaaS applications Product strategy Product discovery UX Research UX/UI Design

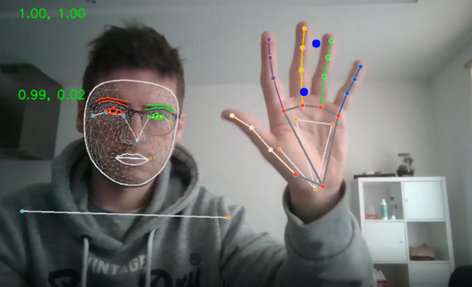

Data & AI Generative AI Computer Vision Predictive systems Data Engineering Consulting & Transformation Cloud & DevOps Digital transformation
